Testability Framework Summary

The Menditect Testability Framework provides a structured and comprehensive architectural approach for Mendix applications. It aims to achieve high Maintainability and Structural Quality by enhancing the Testability of microflows (support for nanoflows will be added later). By adopting a "design for test" (DFT) approach, the framework seeks to reduce reliance on costly, fragile, and maintenance-intensive end-to-end testing in favor of an efficient Testing Pyramid strategy.

The Menditect Testability Framework is provided as open-source content under the Creative Commons Attribution 4.0 International License (CC BY 4.0). It provides architectural guidelines, standardized patterns, and clear principles designed to improve the structural quality of Mendix applications. By adopting this framework, teams can improve not only testability but also maintainability, adaptability, and data quality. This summary serves as a high-level guide to the framework's core concepts.

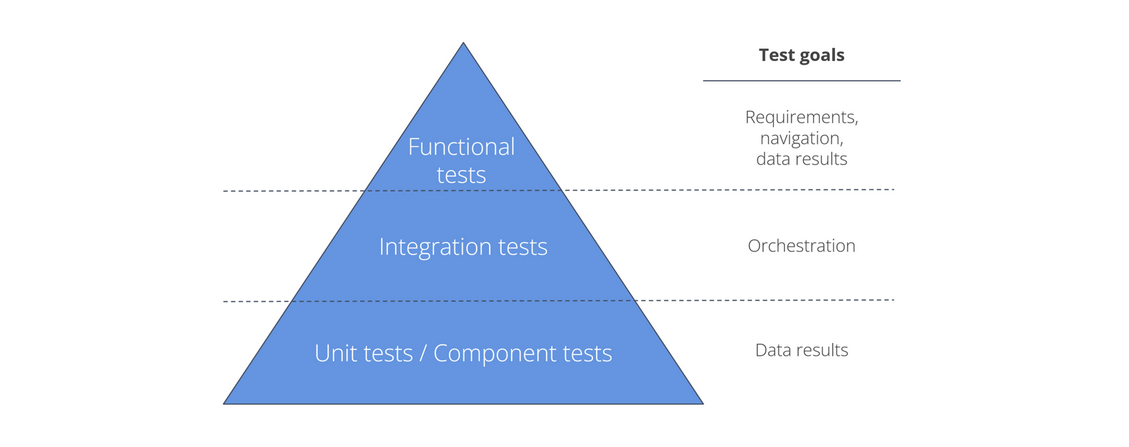

1. The Testing Pyramid in Mendix

The framework recommends a strategy for applying the Testing Pyramid, which prioritizes a high volume of fast, low-level tests and a smaller number of slower, high-level tests. This makes testing more efficient and less reliant on functional UI tests.

The phrase “high volume of fast, low-level tests” should not be interpreted as a requirement to achieve 100% unit-test coverage. Many units carry a low level of risk and therefore do not warrant extensive testing. A risk-based approach is preferable for determining which test types should be implemented and where testing efforts should be prioritized.

The framework adapts traditional test types for the Mendix context:

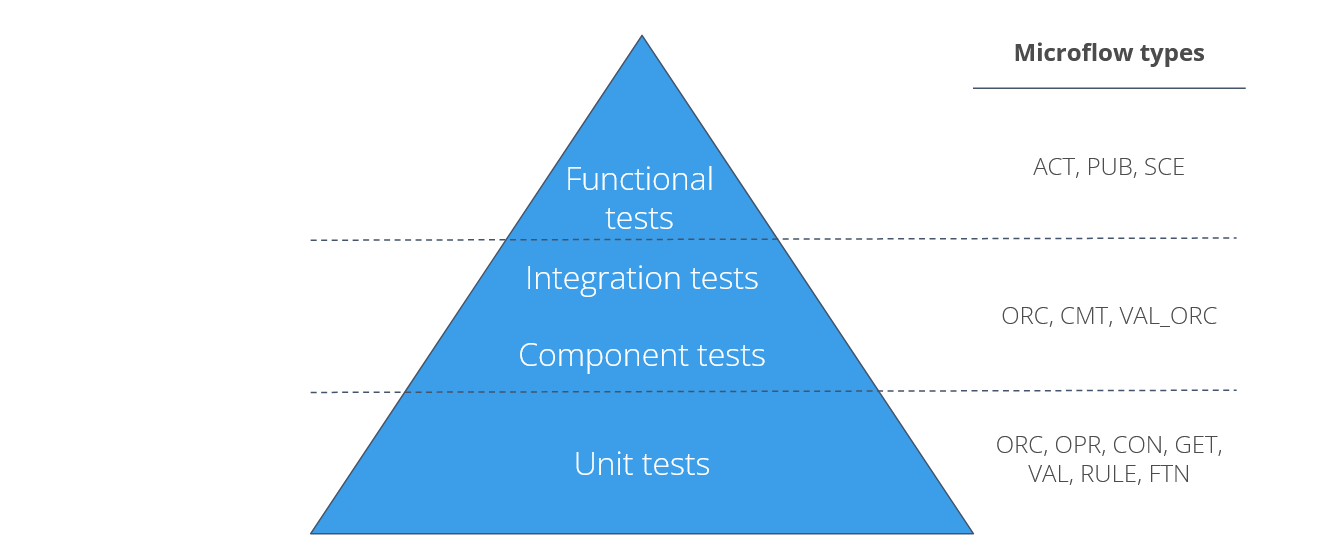

- Unit Tests: Verify the smallest isolated microflows to ensure predictable data results for all boundary values.

- Component Tests: Test the expected data result of an isolated component, focusing on critical business scenarios rather than full edge-case coverage.

- Integration Tests: Validate the correct execution order of units, focusing on the flow and decisions (choice nodes) of Orchestration microflows, often using the TestLogger to assert the execution path.

- Functional Tests: The highest-level, black-box tests, executed via the public interfaces to confirm that the system meets external requirements.
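Microflows are modeled visually, so the following is only a Python analogy for the unit-test level of the pyramid. It assumes a hypothetical unit, `is_valid_quantity`, whose valid range (1 to 100) is invented for illustration, and tests it just below, on, and just above each boundary.

```python
# Hypothetical unit: checks whether an order-line quantity is acceptable.
# Assumption: the valid range is 1..100 inclusive (illustrative values only).
MIN_QUANTITY = 1
MAX_QUANTITY = 100


def is_valid_quantity(quantity: int) -> bool:
    """Return True when the quantity lies within the allowed range."""
    return MIN_QUANTITY <= quantity <= MAX_QUANTITY


def test_is_valid_quantity_boundaries() -> None:
    # Boundary-value analysis: just below, on, and just above each limit.
    cases = {
        MIN_QUANTITY - 1: False,
        MIN_QUANTITY: True,
        MIN_QUANTITY + 1: True,
        MAX_QUANTITY - 1: True,
        MAX_QUANTITY: True,
        MAX_QUANTITY + 1: False,
    }
    for quantity, expected in cases.items():
        assert is_valid_quantity(quantity) is expected, f"quantity={quantity}"


test_is_valid_quantity_boundaries()
```

A component test, by contrast, would exercise a few critical business scenarios through the component's entry point rather than enumerating every boundary.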

2. Core Principles: A Foundation for Testable Design

The framework is built upon two foundational software design principles that pursue Isolatability, the hallmark of a testable design:

- Separation of Concerns (SoC): This principle involves dividing the application into distinct layers and components, each addressing a specific concern (e.g., user interface, business logic, data access). This manages complexity and minimizes functional overlap, making the system easier to test in isolation.

- Dependency Injection (DI): This pattern makes components more independent and testable by providing them with their dependencies (such as data or other objects) from an external source, rather than having them create or retrieve data themselves.
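As a rough Python analogy (in Mendix, injection happens through microflow input parameters rather than code), the sketch below contrasts a unit that retrieves its own data with one that receives it from its caller. The `Order` class, the in-memory `DATABASE` dictionary, and both function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Order:
    # Hypothetical entity used only for illustration.
    order_id: int
    amount: float = 0.0


# Stand-in for the database; in Mendix this would be reached via a Retrieve action.
DATABASE = {1: Order(order_id=1, amount=10.0)}


def apply_discount_retrieving(order_id: int, percentage: float) -> Order:
    """Hard to isolate: the unit fetches its own data from shared storage."""
    order = DATABASE[order_id]
    order.amount = round(order.amount * (1 - percentage / 100), 2)
    return order


def apply_discount(order: Order, percentage: float) -> Order:
    """Easier to test: the caller injects the order it wants changed."""
    order.amount = round(order.amount * (1 - percentage / 100), 2)
    return order


# A test builds its own input and never touches DATABASE.
test_order = Order(order_id=99, amount=200.0)
apply_discount(test_order, 10)
assert test_order.amount == 180.0
```

Because `apply_discount` never reaches into shared storage, a test fully controls its input, which is the isolatability both principles aim for.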

These principles are elaborated in three areas of knowledge: Microflow Typologies, a Testable App Architecture, and ensuring data quality by implementing the ACID principles.

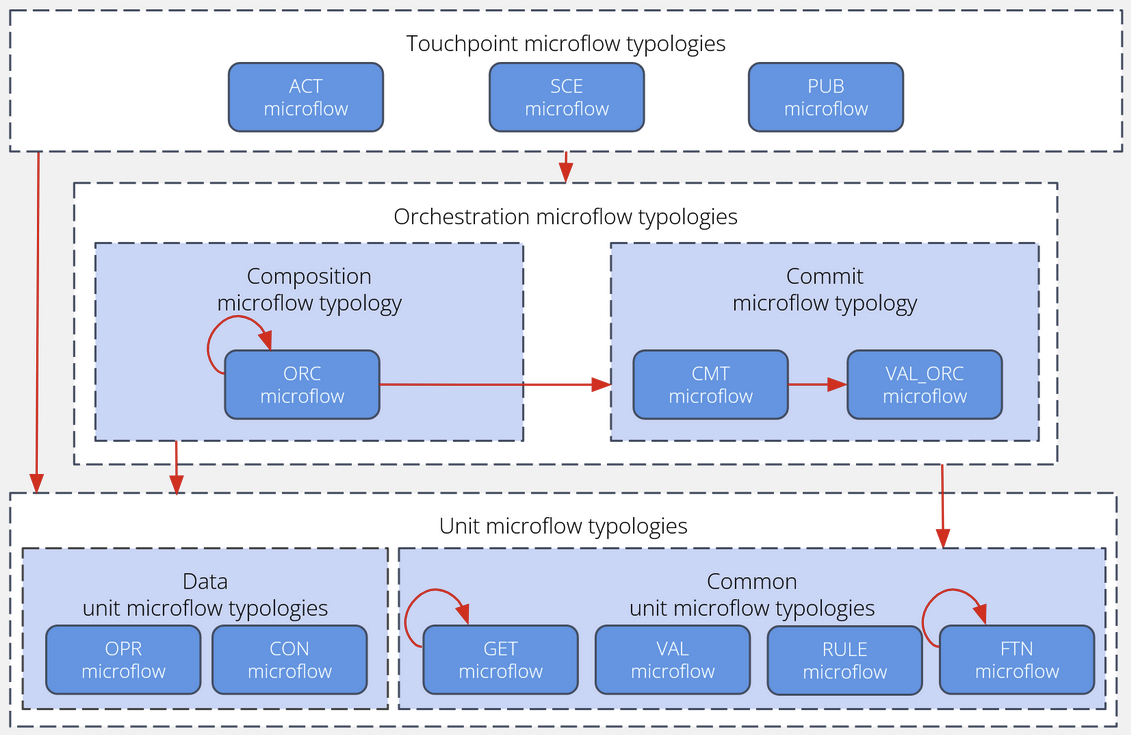

3. Microflow Typologies

The Microflow Typologies in the framework standardize the purpose, pattern, location, and naming of microflows, implementing principles like SoC and DI.

This standardization classifies microflows into Unit, Orchestration, and Touchpoint types. Unit microflow types represent the smallest measurable units of logic and are responsible for data manipulation, retrieval, and validation. Orchestrations compose the execution of Units via call-microflow actions but never change or create values themselves. Touchpoint types handle triggers from external interfaces and are responsible for navigation actions and refreshing the client cache (browser).
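To make this division of responsibilities concrete, here is a Python analogy (not Mendix code) in which every name is hypothetical: units create, change, or validate data; the orchestration only calls units and branches on their results; the touchpoint turns the outcome into a client-side instruction. Returning a string such as `"refresh_page"` is an assumption standing in for a real refresh or navigation action.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class OrderLine:
    product: str
    quantity: int
    unit_price: float


@dataclass
class Order:
    lines: List[OrderLine] = field(default_factory=list)
    total: float = 0.0


# --- Units: the only places where data is created, changed, or validated ---
def create_order_line(product: str, quantity: int, unit_price: float) -> OrderLine:
    return OrderLine(product, quantity, unit_price)


def validate_order_line(line: OrderLine) -> bool:
    return line.quantity > 0 and line.unit_price >= 0


def attach_order_line(order: Order, line: OrderLine) -> None:
    order.lines.append(line)


def set_order_total(order: Order) -> None:
    order.total = sum(line.quantity * line.unit_price for line in order.lines)


# --- Orchestration: calls units and chooses the path, but sets no values itself ---
def add_order_line(order: Order, product: str, quantity: int, unit_price: float) -> bool:
    line = create_order_line(product, quantity, unit_price)
    if not validate_order_line(line):
        return False
    attach_order_line(order, line)
    set_order_total(order)
    return True


# --- Touchpoint: reacts to a UI trigger and decides what the client should do ---
def on_add_line_clicked(order: Order, product: str, quantity: int, unit_price: float) -> str:
    if add_order_line(order, product, quantity, unit_price):
        return "refresh_page"  # stands in for refreshing the client cache
    return "show_validation_message"
```

Each layer of this sketch can be tested on its own: the units with boundary values, the orchestration by its path, and the touchpoint by the instruction it returns.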

The framework assigns each microflow a capital-letter prefix (typically three letters, e.g., ACT_, ORC_, OPR_) that indicates its type and thereby defines its role and its place in the allowed call hierarchy. The call hierarchy defines which microflow types may call which other microflow types (in the diagram, a red arrow indicates an allowed call).

This structure is essential because it allows each microflow type to be linked to a level of the Testing Pyramid, helping the development team target and increase test coverage.
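Because the prefix encodes the typology, naming can be checked mechanically. The Python sketch below parses a microflow name into its prefix, a private flag, and the remaining subject. Only the prefixes that appear in this summary are listed; `KNOWN_PREFIXES` and `parse_microflow_name` are hypothetical names, and a real project would extend the list with the framework's full set.

```python
from typing import NamedTuple, Optional


class MicroflowName(NamedTuple):
    prefix: str    # typology prefix, e.g. "ORC" or "VAL_ORC"
    private: bool  # True when the name starts with an underscore
    subject: str   # the rest of the name, e.g. "order_set_amount"


# Prefixes mentioned in this summary; the complete list is an assumption to extend.
KNOWN_PREFIXES = ("VAL_ORC", "ACT", "ORC", "OPR", "CMT", "FTN", "RULE")


def parse_microflow_name(name: str) -> Optional[MicroflowName]:
    """Split a microflow name into prefix, private flag, and subject.

    Returns None when the name does not start with a known prefix,
    which a naming check could report as a violation.
    """
    private = name.startswith("_")
    bare = name[1:] if private else name
    for prefix in KNOWN_PREFIXES:
        if bare.startswith(prefix + "_"):
            return MicroflowName(prefix, private, bare[len(prefix) + 1:])
    return None


for example in ("ORC_orderline_save", "_ORC_order_set_amount", "orderline_save"):
    print(example, "->", parse_microflow_name(example))
```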

4. A Testable Application Architecture

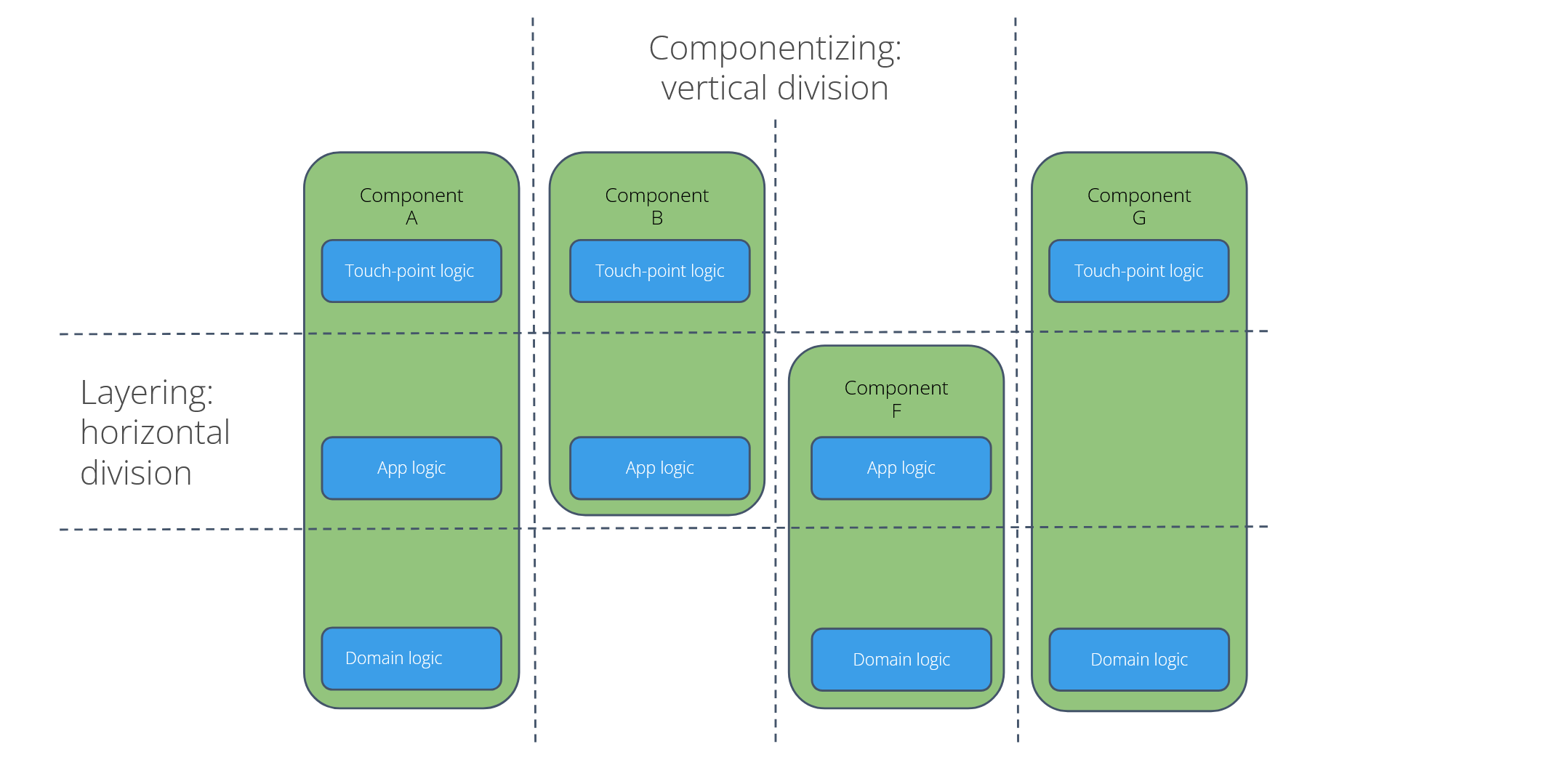

While the Microflow Typologies give a first organization of your application, large applications need more architectural structure. Therefore, the framework introduces both horizontal and vertical divisions of the implemented microflows, and a way to encapsulate internal logic in private microflows.

4.1 Horizontal Division (Layering)

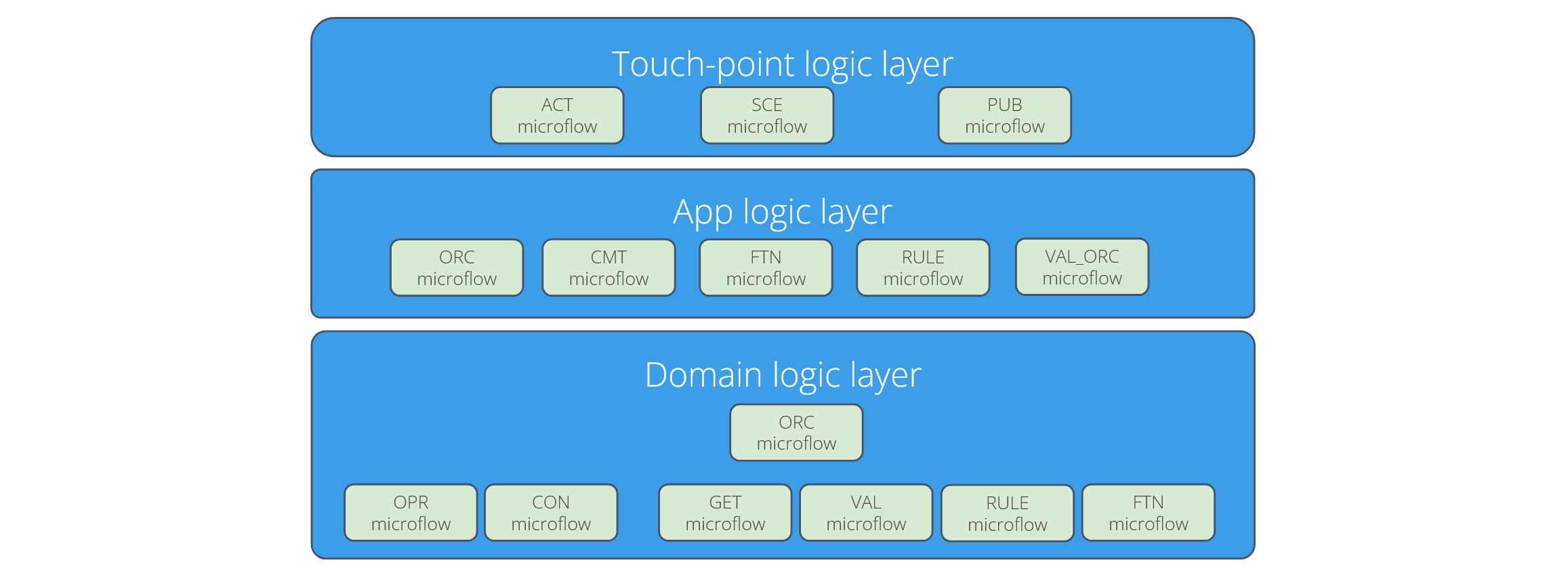

The application is structured into three distinct layers, each with a specific responsibility. A strict, one-way call hierarchy is enforced: higher layers can call lower layers, but not vice versa, which prevents circular dependencies.

- Touchpoint Layer: Manages all external interactions, such as user interfaces (pages), published APIs, and scheduled events. This layer corresponds fully to the Touchpoint microflow typologies.

- App Logic Layer: This layer contains the core business processes and orchestrates the flow of logic between the Touchpoint Layer and the Domain Layer. It includes the orchestration microflow typologies (ORC), committers (CMT), and validation orchestrations (VAL_ORC), and may also contain functions (FTN) and rules (RULE) that concern process control rather than data consistency.

- Domain Layer: This layer encapsulates all unit microflow types and may also include orchestration microflows (ORCs) when they are required to maintain data consistency. For example, an orchestration microflow at the domain-logic level might handle the creation of a unique order line for an order. In this case, the orchestration must first retrieve the existing order lines to verify whether an order line with the unique attribute already exists.
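The one-way rule lends itself to an automated check over a list of caller/callee pairs, however that list is obtained. The Python sketch below is an illustration under stated assumptions: the `Layer` enum, the microflow names, and the `calls` list are hypothetical, and same-layer calls are treated as allowed, since the private-microflow example in section 4.3 has one orchestration calling another.

```python
from enum import IntEnum
from typing import Dict, List, Tuple


class Layer(IntEnum):
    # A higher value means a higher layer.
    DOMAIN = 1
    APP_LOGIC = 2
    TOUCHPOINT = 3


def is_allowed_layer_call(caller: Layer, callee: Layer) -> bool:
    """Calls may go down or stay within a layer, never up.

    Assumption: same-layer calls are allowed here; whether a specific
    same-layer call is permitted is left to the typology call hierarchy.
    """
    return caller >= callee


def find_layer_violations(
    calls: List[Tuple[str, str]], layer_of: Dict[str, Layer]
) -> List[Tuple[str, str]]:
    """Return every (caller, callee) pair that calls upward."""
    return [
        (caller, callee)
        for caller, callee in calls
        if not is_allowed_layer_call(layer_of[caller], layer_of[callee])
    ]


# Hypothetical microflows and the layers they belong to.
layer_of = {
    "touchpoint_save_button": Layer.TOUCHPOINT,
    "ORC_orderline_save": Layer.APP_LOGIC,
    "unit_orderline_create": Layer.DOMAIN,
}
calls = [
    ("touchpoint_save_button", "ORC_orderline_save"),
    ("ORC_orderline_save", "unit_orderline_create"),
    ("unit_orderline_create", "ORC_orderline_save"),  # upward call: a violation
]
print(find_layer_violations(calls, layer_of))
```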

4.2 Vertical Division (Componentizing)

The application is organized into functional components, each encapsulating a specific business capability (such as Order Management). These components are typically implemented as separate Mendix modules to maintain clear boundaries, although a dedicated folder structure can be used instead. Each component also follows an internal layering pattern. The diagram below illustrates the most common layering variations.

4.3 Encapsulation with Public and Private Microflows

To enforce separation between the layers and the components, the framework distinguishes between public and private microflows.

Private microflows (prefixed with an underscore, _) contain internal logic that must not be called from outside the component or layer in which they reside. For example, the orchestration microflow ORC_orderline_save, which saves an order line, may call a sub-microflow ORC_order_set_amount to calculate the total amount on the associated order. Since this sub-microflow should only be triggered during the save process, it is made private and named _ORC_order_set_amount.

Public microflows act as the formally exposed entry points of a module or component. They define the stable, supported ways in which external modules, pages, or microflows are allowed to interact with the encapsulated logic. By calling only these public microflows, rather than internal implementation microflows, other parts of the application gain controlled, predictable access without bypassing the module’s intended boundaries.

Any microflow type can be marked as private, but in practice this is most commonly done for orchestration microflows.
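Because privacy is signaled by the name, the rule can be checked mechanically. The Python sketch below assumes each microflow is known by its name and containing component; the `Microflow` tuple, `may_call`, and the `Invoicing` component are hypothetical, while the two order microflows come from the example above.

```python
from typing import NamedTuple


class Microflow(NamedTuple):
    name: str
    component: str  # the module (component) that contains the microflow


def is_private(flow: Microflow) -> bool:
    # Framework convention: a leading underscore marks a private microflow.
    return flow.name.startswith("_")


def may_call(caller: Microflow, callee: Microflow) -> bool:
    """Public microflows may be called from anywhere; private ones only from
    within their own component. Layer boundaries are not checked in this sketch."""
    return not is_private(callee) or caller.component == callee.component


save = Microflow("ORC_orderline_save", "OrderManagement")
set_amount = Microflow("_ORC_order_set_amount", "OrderManagement")
outsider = Microflow("ORC_invoice_create", "Invoicing")  # hypothetical

print(may_call(save, set_amount))      # allowed: same component
print(may_call(outsider, set_amount))  # rejected: private, other component
print(may_call(outsider, save))        # allowed: public entry point
```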

5. Ensuring Data Quality with ACID Principles

While the Mendix platform inherently handles the Isolation (I) and Durability (D) aspects of ACID transactions, Atomicity (A) and Consistency (C) must be implemented by the developer. The framework provides patterns to enforce these principles:

- Atomicity (All or Nothing): This is achieved by defining a Scope, a collection of all objects involved in a single business transaction that must be committed or rolled back together. The framework details several patterns for managing this scope and mandates that all database commits be centralized in a dedicated Commit Microflow (CMT).

- Consistency (Valid State to Valid State): This is ensured through a layered validation strategy in which authoritative server-side checks of all business rules are executed within the CMT microflow before any data is committed.
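The following Python sketch illustrates the idea rather than Mendix's own transaction handling: a hypothetical `Scope` gathers the objects of one business transaction, and a `commit_scope` function, standing in for a CMT microflow, runs every validation before persisting anything. The list used as a database and the two business rules are assumptions for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import List


class ValidationError(Exception):
    """Raised by the commit step when a business rule fails."""


@dataclass
class OrderLine:
    quantity: int
    unit_price: float


@dataclass
class Order:
    lines: List[OrderLine] = field(default_factory=list)
    total: float = 0.0


@dataclass
class Scope:
    """All objects of one business transaction, committed together or not at all."""
    objects: list = field(default_factory=list)

    def add(self, obj) -> None:
        self.objects.append(obj)


def validate_scope(scope: Scope) -> List[str]:
    # Hypothetical business rules; real rules are application-specific.
    errors = []
    for obj in scope.objects:
        if isinstance(obj, OrderLine) and obj.quantity <= 0:
            errors.append("order line quantity must be positive")
        if isinstance(obj, Order):
            expected = sum(line.quantity * line.unit_price for line in obj.lines)
            if not math.isclose(obj.total, expected):
                errors.append("order total does not match its lines")
    return errors


def commit_scope(scope: Scope, database: list) -> None:
    """Sketch of a CMT: validate the whole scope first, then persist all of it."""
    errors = validate_scope(scope)
    if errors:
        raise ValidationError("; ".join(errors))  # nothing has been written
    database.extend(scope.objects)  # the single place where data is stored


database: list = []
line = OrderLine(quantity=2, unit_price=5.0)
scope = Scope()
scope.add(Order(lines=[line], total=10.0))
scope.add(line)
commit_scope(scope, database)  # both objects are stored together
```

If any rule fails, the exception is raised before `database.extend` runs, so either every object in the scope is stored or none is.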

6. Helper Components for Enhanced Testability

The framework includes the TestLogger helper component, a lightweight tool that records the execution path of microflows. This "footprint" of the logic can be used to create powerful and stable assertions for integration and regression tests.
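The TestLogger itself is a Mendix component, and its actual interface is not reproduced here. As an illustration only, the Python sketch below uses a hypothetical `FootprintLogger` that a hypothetical orchestration writes to at each step; an integration-style test then asserts the recorded path for each branch of a choice node.

```python
from typing import List


class FootprintLogger:
    """Hypothetical stand-in for the TestLogger: records executed steps in order."""

    def __init__(self) -> None:
        self.steps: List[str] = []

    def log(self, step: str) -> None:
        self.steps.append(step)


def orderline_save(quantity: int, logger: FootprintLogger) -> bool:
    """Hypothetical orchestration with one decision (choice node)."""
    logger.log("validate_orderline")
    if quantity <= 0:
        logger.log("report_validation_error")
        return False
    logger.log("create_orderline")
    logger.log("set_order_amount")
    logger.log("commit_scope")
    return True


# Integration-style test: assert the execution path, not the data values.
happy = FootprintLogger()
orderline_save(3, happy)
assert happy.steps == [
    "validate_orderline",
    "create_orderline",
    "set_order_amount",
    "commit_scope",
]

invalid = FootprintLogger()
orderline_save(0, invalid)
assert invalid.steps == ["validate_orderline", "report_validation_error"]
```

Passing the logger in as a parameter keeps the orchestration free of test-only dependencies, in line with the Dependency Injection principle.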